🚀 Rethink AI at the Edge

Affordable Rackmount AI Server for Open-Source, RAM-Hungry Workloads

Not your typical AI server.

While most AI servers chase the biggest, flashiest GPUs (and the five-or-six-figure price tags to match), we’ve taken a smarter, leaner approach. Our rackmount AI server is built for real-world developers and small teams who want powerful local AI capabilities - and full control, and privacy - without breaking the bank.

💡 Optimized for Open-Source AI

From Convolutional Neural Networks, Large Language Models (LLMs), Music, Image and video generation, to computer vision, open-source AI tools are exploding - and many don’t need the latest ultra-premium GPU. Instead, they need RAM. Lots of it. Our server is built around that insight:

- Up to 2TB of DDR4/DDR5 ECC RAM (more by custom quote)

- COTS GPUs (readily available consumer/workstation cards like the RTX 4070, 4080, 50x0, 3090, or even A4000s)

- No vendor lock-in: 100% compatible with PyTorch, TensorFlow, Ollama, LocalAI, Jan, and other open-source tooling

- Runs models locally—no cloud subscription, no API rate limits, no leaking data to third parties, no quotas, no snooping

🧠 Why RAM Matters More Than You Think

When you’re fine-tuning models or running LLMs like Mistral, Mixtral, or LLaMA 3/4, total RAM can be the bottleneck—not raw GPU horsepower. Our system is built to hold models entirely in memory, with smart paging that minimizes GPU swap time and accelerates inference on mid-range cards.

🛠️ Built With Flexibility in Mind

Whether you're running Ollama on a 70B model or experimenting with custom diffusion pipelines, this server keeps up.

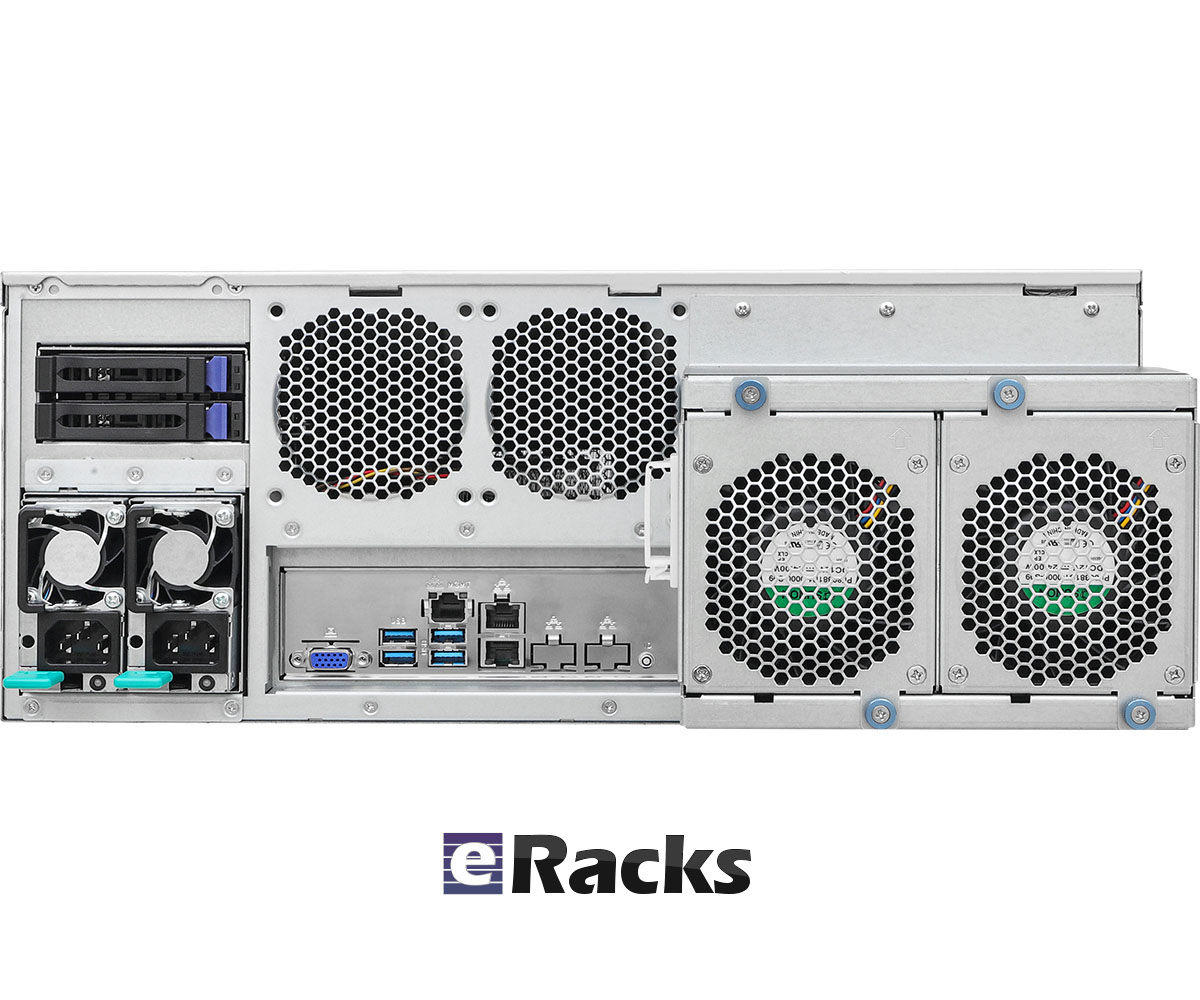

- Standard 4U rackmount chassis

- Multi-GPU capable (up to 4x dual-slot cards)

- Tool-less maintenance & modular layout

- Runs Ubuntu, Rocky Linux, or Proxmox with Docker/Podman support out of the box

- Power-efficient PSU options (platinum-rated available)

🔓 Local. Private. Yours.

Tired of sending sensitive data to third-parties just to get results? Our server keeps everything on-premises. It’s your stack, your data, your control.

Perfect for:

- AI developers & ML researchers

- Privacy-focused orgs

- On-prem LLM deployment

- Edge AI applications

- Open-source model enthusiasts

💬 About the AINSLEY name

There have been questions about the AINSLEY name, and why it doesn't have a cutesy acronym like our other AI models, the answer is that this was our first AI model released in 2013, custom-designed for a major California University, and at the time, was named for Aynsley Dunbar (Journey, John Mayall, Frank Zappa, Jeff Beck, Jefferson Starship, Eric Burdon, Lou Reed, David Bowie, Whitesnake, Pat Travers, Sammy Hagar, Michael Schenker, Leslie West, and Keith Emerson, among others).

That said, for those of you that really want an acronym, here are a few (albeit tortured, contrived, etc) possibilities:

- Advanced Inference Node for Scalable Local Enterprise Yield

- Accelerated Intelligent Node for Scalable LLM Engine Yield

- Advanced Inference Node - Scalable Learning & Experimentation Yield

- Advanced Inference Node for Scalable Local Experiments - Yours

- AI Inference Node for Scalable Local Enterprise - Yours

- AI-Intensive Neural Server for Large Experiments - Yes!

- Advanced Intelligent Node for Serious LLM Experiments - Yep

- Advanced Inference Node for Scalable Local Experimentation & Yield

Anyway, we'll stick with the Aynsley Dunbar inspiration :-)

💬 Ready to Ditch the Cloud?

If you're looking for an AI server that doesn’t cost more than a luxury car—and actually fits your stack—this is it.

Reach out for a build quote or demo.

AI should be accessible, private, and efficient. Not merely expensive.

eRacks Open Source Systems

eRacks Open Source Systems